Many pharmaceutical manufacturers strive for continued product verification, but there are many advantages to focusing on continuous process control instead.

Many pharmaceutical manufacturers are working towards continued process verification to live up to health authority expectations. Continued means an ongoing effort after validation, but it can be based on sampling. Verification is a passive act, where the process is estimated to live up to specifications without any adjustment of process parameters.

However, in many cases, the current practice is continued product verification, where product critical quality attributes (CQAs) are tested after processing, as in classical batch release testing. The ideal is continuous process verification and control, where process parameters are adjusted to keep processes on target.

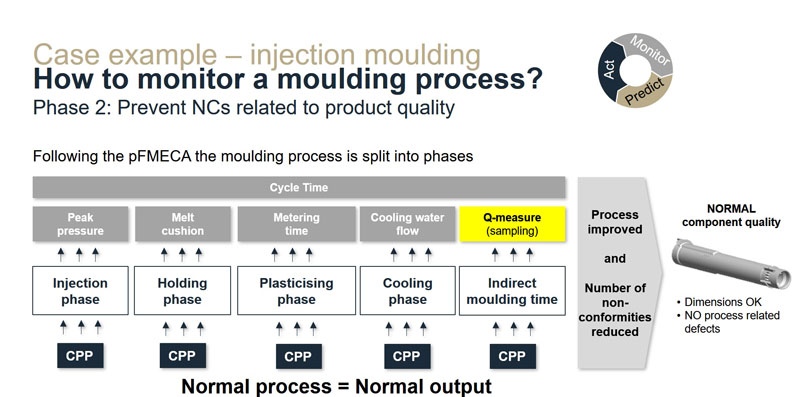

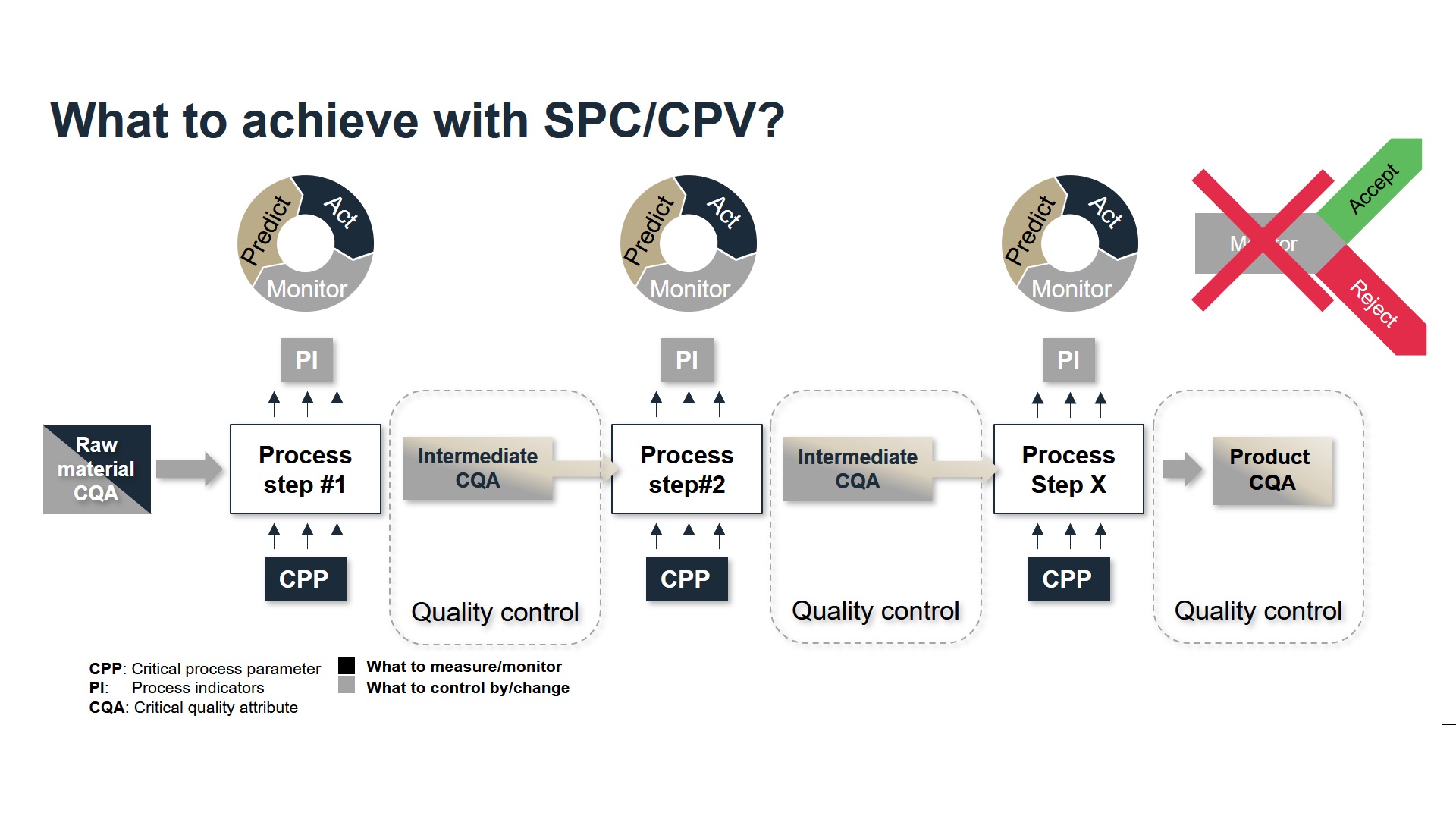

This ideal state is illustrated in Figure 1. Each unit operation is continuously monitored, the quality is predicted, and corrective action is taken if the predicted quality moves away from target.

Figure 1: For each unit operation, process indicators (PIs) that can predict CQAs and critical process parameters (CPPs) that can control the PIs must be identified and their relations established.

To reach this ideal state from continued product verification, the following improvements are needed:

- Aim for process verification to get early feedback and easily identify causes

- Aim for continuous verification to always verify the process

- Aim for process control, where the measured process signals are used as decision criteria for changing process settings

Challenges of shifting from continued product verification to continuous process control

Some of the challenges of moving from continued product verification to continuous process control are:

- What to monitor?

- When to act?

- How to act?

What to monitor?

A set of process indicators (PIs) is necessary for real-time monitoring of a process. A PI is something that can be measured in real time and that correlates with CQAs. Typically, several PIs are needed to get a good prediction of the CQAs. The critical settings of the process (here called critical process parameters, CPPs) are often also included in the predictive model's transfer function F:

$$\text{Predicted CQA} = F(\mathrm{CPP}_1, \ldots, \mathrm{CPP}_n, \mathrm{PI}_1, \ldots, \mathrm{PI}_m)$$

On top of having identified PIs and CPPs, their relation to CQAs needs to be established. The recommended procedure is to perform a design of experiment (DoE), where CPPs are systematically varied and CQAs and PIs are measured as responses.

When a DoE is conducted with uncorrelated CPPs, a correlation between a CPP and a CQA or PI reflects a causal effect, and inflation of the estimation errors of the model effects is prevented. The other advantage of a DoE is that a large variation in CPPs, PIs and CQAs is obtained. Variables need to vary for the correlation between them to be fully explored.
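The point about uncorrelated CPPs can be checked numerically. The following is a minimal sketch, assuming a two-level full factorial design in coded units for three hypothetical CPPs; the helper names `full_factorial` and `correlation` are illustrative and not taken from any particular DoE package.

```python
from itertools import product


def full_factorial(n_factors):
    """Return all runs of a two-level full factorial design in coded units (-1, +1)."""
    return [list(run) for run in product((-1, 1), repeat=n_factors)]


def correlation(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


design = full_factorial(3)      # 8 runs for three hypothetical CPPs
columns = list(zip(*design))    # one tuple of settings per CPP

# Pairwise correlations between the CPP columns of the design.
pairwise = {
    (i, j): correlation(columns[i], columns[j])
    for i in range(3)
    for j in range(i + 1, 3)
}
print(pairwise)
```

All pairwise correlations are zero by construction, which is what allows the effect of each CPP to be estimated independently of the others.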

If a DoE is not possible, relations can be built on historical data, but this comes with the risk of:

- Mixing up correlations and causations

- Large estimation errors on model effects in case of correlated CPPs

- Insufficient variation to see correlations
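The second risk can be made concrete with the variance inflation factor (VIF), which for two predictors equals 1 / (1 - r²), where r is their correlation. The sketch below uses made-up numbers: `feed_rate` and `temperature` are hypothetical CPPs that drift together in historical data, and they are compared with a small orthogonal design.

```python
def correlation(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def vif_two_predictors(x1, x2):
    """Variance inflation factor when a model contains exactly two predictors."""
    r = correlation(x1, x2)
    return 1 / (1 - r ** 2)


# Made-up historical data: temperature happened to follow the feed rate.
feed_rate = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
temperature = [30.2, 31.1, 31.9, 33.2, 33.8, 35.1]
vif_historical = vif_two_predictors(feed_rate, temperature)

# Two-level factorial design in coded units: the two columns are orthogonal.
vif_doe = vif_two_predictors([-1, 1, -1, 1], [-1, -1, 1, 1])

print(f"VIF historical: {vif_historical:.1f}, VIF DoE: {vif_doe:.1f}")
```

A VIF close to 1 means the effect estimates are not inflated, while a large VIF means the variance of each estimated effect is inflated by that factor, so the standard errors grow with its square root.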

Once the prediction model has been cross-validated, it can be the basis for establishing the process design space to operate in. It can also be used in a performance-based approach to control, as described in ICH Q12. The predicted CQA can be used for in-line release testing, replacing product testing. See Case 1 at the end.
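As an illustration of cross-validating a prediction model, here is a minimal sketch that fits a transfer function with a single PI by ordinary least squares and estimates its RMSE by leave-one-out cross-validation. The data are invented, and a real transfer function would typically contain several PIs and CPPs.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope


def loo_rmse(x, y):
    """Root mean squared error of leave-one-out predictions."""
    squared_errors = []
    for i in range(len(x)):
        # Refit the model without run i, then predict run i.
        x_train = x[:i] + x[i + 1:]
        y_train = y[:i] + y[i + 1:]
        intercept, slope = fit_line(x_train, y_train)
        squared_errors.append((y[i] - (intercept + slope * x[i])) ** 2)
    return (sum(squared_errors) / len(squared_errors)) ** 0.5


# Made-up illustration data: one PI and the CQA measured in the same runs.
pi_values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
cqa_values = [2.1, 3.9, 6.2, 7.8, 10.1, 11.9]

intercept, slope = fit_line(pi_values, cqa_values)
rmse = loo_rmse(pi_values, cqa_values)
print(f"CQA ~ {intercept:.2f} + {slope:.2f} * PI, leave-one-out RMSE = {rmse:.2f}")
```

The cross-validated RMSE is the natural measure of prediction uncertainty to carry forward when limits are set on the predicted CQA.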

When to act?

Based on the transfer function between CQAs and PIs, specifications on CQAs can be broken down to specifications on PIs. However, since several PIs typically exist in the transfer function, this can be done in multiple ways. If a larger tolerance is given to one PI, narrower tolerances must be given to the other PIs. A simpler approach might be to look at trends in the predicted CQA and use specification limits for the CQA narrowed by the prediction uncertainty, e.g. 2 times root mean squared error (RMSE).
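The simpler approach can be sketched in a few lines. The function name `narrowed_limits` and the numbers are hypothetical; the factor of 2 is the example given in the text.

```python
def narrowed_limits(lsl, usl, rmse, k=2.0):
    """Shrink the specification limits by k * RMSE on each side."""
    lower, upper = lsl + k * rmse, usl - k * rmse
    if lower >= upper:
        raise ValueError("Prediction uncertainty consumes the whole specification window")
    return lower, upper


# Made-up example: CQA specification 9.0 to 11.0, prediction RMSE 0.2.
lower, upper = narrowed_limits(lsl=9.0, usl=11.0, rmse=0.2)
print(lower, upper)
```

If the narrowed window becomes empty, the prediction model is too uncertain to be used against that specification, which the sketch reports as an error.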

However, specification limits (reflecting the voice of the customer) on the predicted CQA should not be used as action limits. Acting only when an out-of-specification (OOS) result occurs, i.e. when faulty product has already been made, is far too late. Instead, it is recommended to raise a warning when the predicted CQA is outside the normal operating window (reflecting the voice of the process), which must lie inside the specification window. In this way, an alert is triggered before it is too late. The normal operating window is classically found by making a control chart. A control chart is a trend curve with warning limits centered around the mean or the target, set so that there is only a risk alpha of getting a false alert. Alpha is a low number, typically 0.00135. For normally distributed processes, the limits then become:

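$$\text{Limits} = \mu \pm Z_{1-\alpha}\,\sigma$$

where $Z_{1-\alpha}$ is the normal quantile (approximately 3 for $\alpha = 0.00135$ applied to each limit), $\mu$ is the process mean or target and $\sigma$ is the process standard deviation.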
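For illustration, classical control limits can be computed with the Python standard library. This is a sketch that assumes the limits take the usual form of mean plus or minus the normal quantile times the standard deviation, with alpha applied to each limit; the data are invented.

```python
from statistics import NormalDist, mean, stdev


def control_limits(values, alpha=0.00135):
    """Classical limits: centre +/- Z(1 - alpha) * standard deviation."""
    z = NormalDist().inv_cdf(1 - alpha)
    centre, sigma = mean(values), stdev(values)
    return centre - z * sigma, centre + z * sigma


# Made-up predicted CQA values from a stable period of production.
predicted_cqa = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9]

z = NormalDist().inv_cdf(1 - 0.00135)
lcl, ucl = control_limits(predicted_cqa)
print(f"Z = {z:.3f}, limits = ({lcl:.2f}, {ucl:.2f})")
```

With alpha = 0.00135 the quantile is very close to 3, which recovers the familiar three-sigma limits. Note that the sketch plugs in the sample mean and standard deviation as if they were the true values.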

However, there are several issues with classical control charting:

- It assumes that the true mean and standard deviation of the process are known. However, typically there is only a limited data set from which the mean and standard deviation are estimated.

- It assumes that the predicted CQA can be described by a simple model with a single normal distribution. However, most processes are too complex for that. Because of, for example, between-batch variation and drift within a batch, a variance component model is needed. In addition, there can be systematic factors, such as production units when processing is done in parallel.

These issues can be solved by creating a statistical model of the process with random factors, such as batch and time point within a batch, and, if needed, systematic factors such as production units. From this model, future measurements can be predicted. Prediction from statistical models is standard functionality in statistical software packages. Predictions are based on the t-quantile instead of the normal quantile and thereby take the uncertainty in estimation into consideration.
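The effect of switching to the t-quantile can be illustrated with a deliberately simplified sketch. It assumes a single normally distributed sample with no batch or unit factors, so it is not the variance component model described above. The prediction interval for one future observation is then mean ± t · s · sqrt(1 + 1/n). The t-quantile is computed numerically here so that the example needs only the standard library; a statistical package would normally supply it.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    c = math.exp(log_c) / math.sqrt(df * math.pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def t_cdf(x, df, steps=2000):
    """CDF for x >= 0, integrating the density on [0, x] with Simpson's rule."""
    h = x / steps
    total = t_pdf(0.0, df) + t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 + total * h / 3


def t_quantile(p, df):
    """Quantile for p > 0.5, found by bracketing and bisection."""
    lo, hi = 0.0, 1.0
    while t_cdf(hi, df) < p:
        hi *= 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def prediction_limits(values, alpha=0.00135):
    """Limits for one future observation from a single normal sample."""
    n = len(values)
    t = t_quantile(1 - alpha, n - 1)
    half_width = t * stdev(values) * math.sqrt(1 + 1 / n)
    centre = mean(values)
    return centre - half_width, centre + half_width


# Made-up predicted CQA values from a stable period of production.
predicted_cqa = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9]
lower, upper = prediction_limits(predicted_cqa)
print(f"prediction limits = ({lower:.2f}, {upper:.2f})")
```

With only eight observations the prediction limits are noticeably wider than three-sigma limits computed from the same data, which reflects the uncertainty in the estimated mean and standard deviation.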

Thus, the recommendation is to use trend curves on predicted CQAs and act if observations are outside prediction limits. An obvious validation criterion is that prediction limits must be inside specification limits, so alerts are obtained before an OOS appears.

How to act?

If observations are outside the prediction limits, the process has changed compared to the validated state. If there is still sufficient distance to the specification limits, updating the prediction limits could be considered. Alternatively, the CPP settings should be changed to bring the predicted CQA back on track. The transfer function that relates the predicted CQA to the CPPs should contain the information on how to act.
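As a sketch of how a transfer function can tell the operator how to act, assume a hypothetical linear model with one CPP and one PI. Solving it for the CPP gives the setting that brings the predicted CQA back to target at the currently measured PI value. The coefficients, names and ranges below are invented for illustration.

```python
def predicted_cqa(cpp, pi, coef):
    """Hypothetical linear transfer function: CQA = b0 + b_cpp * CPP + b_pi * PI."""
    return coef["b0"] + coef["b_cpp"] * cpp + coef["b_pi"] * pi


def cpp_for_target(target, pi, coef, cpp_range):
    """Solve the linear transfer function for the CPP setting that hits the target."""
    cpp = (target - coef["b0"] - coef["b_pi"] * pi) / coef["b_cpp"]
    low, high = cpp_range
    # Clamp to the range explored in the DoE; the model is not valid outside it.
    return min(max(cpp, low), high)


coef = {"b0": 2.0, "b_cpp": 0.5, "b_pi": 1.5}  # made-up coefficients
new_cpp = cpp_for_target(target=10.0, pi=4.0, coef=coef, cpp_range=(0.0, 10.0))
print(new_cpp, predicted_cqa(new_cpp, 4.0, coef))
```

Clamping the result to the explored range is a design choice in this sketch: if the required setting falls outside that range, the target cannot be reached by this CPP alone and the deviation needs further investigation.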

Case 1: Real-time release testing based on DoE

A manufacturer of medical devices uses injection molding to make components for the device.

- 50-100 units per production site

- Extremely large volumes and low manning

- 100% part inspection not considered realistic

So, despite an intensive component QC sample inspection, there is a risk that random defects are not found.

The injection molding process was divided into phases, and for each phase PIs (grey) and CPPs (dark blue) were identified as shown in Figure 2. DoEs were performed to establish relations between CPPs, PIs and CQAs (dimensions and defects). Based on these relations, product testing has been replaced by in-line release testing on PIs.

Figure 2: CPP and PIs for injection molding process.

The major outcomes of implementing in-line release testing have been:

- 2 MEUR/year saved in IPC hours

- Drastically reduced risk of approving random errors

- Validation time reduced from 20 weeks to 3 weeks

- Scrap rate reduced 70%

- OEE increased 7%

Case 2: Process optimization and control based on historical data

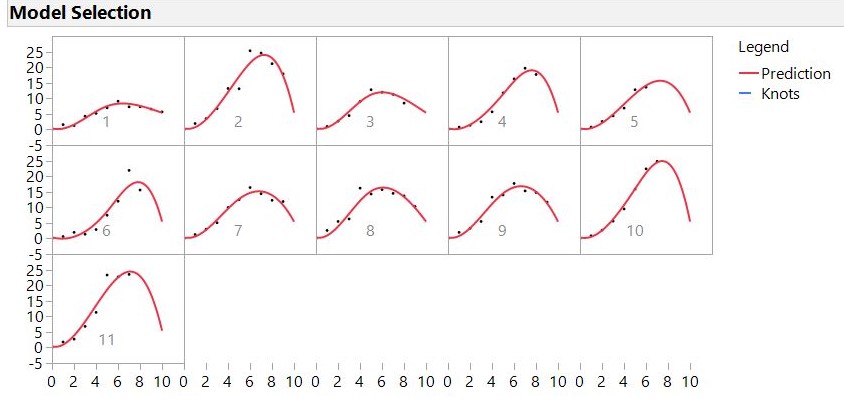

A manufacturer had yield issues after upscaling. The first 11 pilot runs after upscaling were analyzed for correlations between yield, PIs and CPPs. The challenge here was that CPPs (e.g. feed) and PIs (e.g. glucose consumption and lactate production) are not numbers as in part production, but are functions of time as shown in Figure 3.

Figure 3: PI development over time for 11 pilot runs.

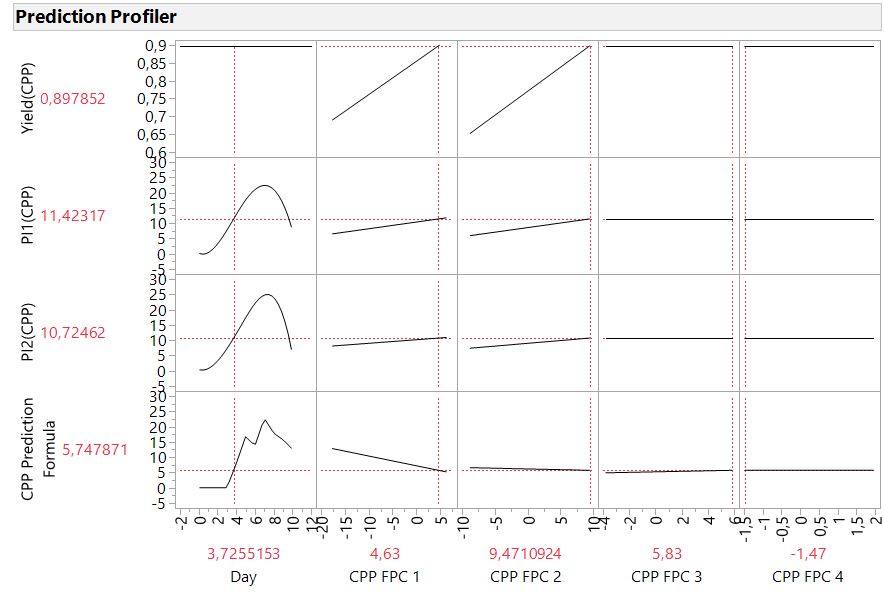

This issue was solved using the Functional Data Explorer in JMP from SAS, where functional principal components (FPCs) of the curve fits are used to describe the curves. Relations between yield and PI FPCs were found using partial least squares modeling (because the PIs were heavily correlated), and standard least squares modeling was used to establish relations between PIs and CPPs. The combined relations are shown in the profiler in Figure 4. This profiler shows the optimal PI curves (the target during production) and how to obtain these curves by changing the CPPs. After using the PI curves to decide the CPPs, the manufacturer has obtained:

- 30% increase in average yield

- 70% reduction in yield variation from batch to batch

Figure 4: Relations between Yield, PIs and CPPs for functional data including FPCs

Conclusions

Continuous process control has many advantages compared to continued product verification. When verification is done continuously, the risk of overlooking random errors is low compared to sampling. Knowing the relations between CQAs and PIs forms the basis for real-time release testing. Process control is active: process parameters are adjusted to keep process quality on target. Process verification, by contrast, only verifies that the process is inside specification.

By keeping processes on target, not just inside specifications, a much more robust manufacturing process is obtained. Continuous process control is also a prerequisite for continuous processing, which is becoming increasingly popular due to its smaller footprint and investment, shorter processing times and increased flexibility.